Building AI-powered products | Part 1: Getting Started

To AI and beyond!

Today’s advanced AI models have dramatically expanded what this technology can do, and almost every company is either adding AI to its products or pivoting to AI-native products. While getting started with these models is much easier than with traditional ML solutions, using them effectively to build products still requires some understanding of how they work.

In this post, we'll discuss how we started building AI-powered products at Quizizz. We'll begin with a basic understanding of how these models work, followed by some simple and effective prompt engineering techniques.

Understanding Large Language Models (LLMs)

LLMs, such as GPT-4o or Claude 3.5 Sonnet, are language models trained on vast amounts of data. Their primary job is to predict the next token (a word or a piece of a word) in a sequence, much like an advanced autocomplete system.

LLMs use the context provided by the preceding tokens to predict what should come next. This predictive ability is what makes them effective at understanding language, following instructions, and answering questions.
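To make the "advanced autocomplete" idea concrete, here’s a deliberately tiny sketch: a bigram model that counts which word follows which in a made-up corpus, then generates text one token at a time by feeding each prediction back in. It only illustrates the autoregressive loop. Real LLMs use subword tokenizers and neural networks that condition on the whole context, not just the previous word.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each token follows each other token (whitespace tokens only)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: Dict[str, Counter], token: str) -> Optional[str]:
    """Greedy prediction: the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts: Dict[str, Counter], prompt: str, max_new_tokens: int = 5) -> str:
    """Autoregressive loop: predict a token, append it, and predict again from the new end."""
    tokens = prompt.lower().split()
    for _ in range(max_new_tokens):
        nxt = predict_next(counts, tokens[-1])
        if nxt is None:
            break
        tokens.append(nxt)
    return " ".join(tokens)


corpus = ["the cat sat on the mat", "the cat ate the fish", "a dog sat on the rug"]
counts = train_bigram(corpus)
print(generate(counts, "the"))  # the cat sat on the cat
```

The output loops back to "the cat" because this toy model only ever looks at one previous word. Using much more of the preceding context is a large part of what makes LLM predictions useful.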

Most LLMs are further fine-tuned on specific datasets to improve their performance on particular tasks. For example, the models behind ChatGPT were further trained on conversation-based datasets to make them proficient at interactive dialogue.
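Conversation datasets are commonly stored as sequences of role-tagged turns. The record below is a hypothetical example written in the JSON Lines style that OpenAI’s chat fine-tuning guide describes (a `messages` list of `role`/`content` pairs, one record per line). It is invented for illustration and is not the data used to train ChatGPT.

```python
import json

# A hypothetical conversation-style training record. Each turn carries a role,
# so the model learns to continue as the assistant. The content is invented.
example = {
    "messages": [
        {"role": "system", "content": "You are a helpful teaching assistant."},
        {"role": "user", "content": "What is photosynthesis?"},
        {"role": "assistant", "content": "It is how plants use sunlight, water and carbon dioxide to make glucose and oxygen."},
        {"role": "user", "content": "Where in the plant does it happen?"},
        {"role": "assistant", "content": "Mostly in the leaves, inside organelles called chloroplasts."},
    ]
}


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


line = to_jsonl([example])
print(line)
```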

Choosing the Right Model

Our primary use case at Quizizz was reducing the time and effort teachers spend creating assessments. We wanted a model that was lightweight, fast, and inexpensive, yet still effective at generating the right content.

Here are some common options:

OpenAI’s GPT Models

Anthropic’s Claude Models

Google’s Gemini Models

Meta’s Llama Models

Smaller models are faster and cheaper than larger ones, but they are generally less capable on complex tasks.

We chose OpenAI’s GPT models while we were experimenting with integrating AI into Quizizz. GPT-3.5 was one of the first models we integrated, and it gave us decent results. As better models such as GPT-4 and then GPT-4o became available, we were quick to experiment with them and switch, while continuing to use GPT-3.5 for simpler tasks. Our goal was always to optimize for cost without compromising on quality, so that teachers still had a great experience with AI.
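One way to express a "smaller model for simpler tasks" policy is a small routing function that picks a model per task type. In the sketch below, `gpt-3.5-turbo` and `gpt-4o` are OpenAI API model names. The task names, and which tasks count as "simple", are hypothetical assumptions rather than our production configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelChoice:
    name: str
    reason: str


# Hypothetical set of task types considered simple enough for a smaller model.
SIMPLE_TASKS = {"rephrase_question", "fix_grammar", "translate_short_text"}


def choose_model(task_type: str) -> ModelChoice:
    """Route simple tasks to a smaller, cheaper model and everything else to a larger one."""
    if task_type in SIMPLE_TASKS:
        return ModelChoice("gpt-3.5-turbo", "simple task: optimize for cost and latency")
    return ModelChoice("gpt-4o", "complex task: optimize for quality")


print(choose_model("fix_grammar").name)
print(choose_model("generate_answer_explanation").name)
```

Keeping the routing in one place makes it cheap to re-run the same evaluation when a newer model is released and move a task from one tier to the other.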

Getting the Most from Your Model: Prompt Engineering

Prompt engineering is the art of designing effective prompts to get the best response from an LLM. A well-crafted prompt can significantly enhance the quality of the output.

Be Direct and Specific

At Quizizz, we started with a simple prompt that created questions based on the subject or topic entered by the teacher. This was one of the earliest versions we used, and teachers gave us valuable feedback on how to improve it.

It’s important to keep the prompt as simple as possible so the model can understand it and act on it. A few tips for being direct and specific:

Avoid ambiguous words and phrases.

Specify the desired response format, expected length, and tone of the response.

Be as objective as possible.

Do not mention redundant details in the prompt.

Break down the task into smaller and easier sub-tasks.

Do not ask leading questions if you don’t want leading answers.

Instead of saying what not to do, say what to do.
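Here’s a minimal sketch of how those tips might translate into a question-generation prompt template. The function name, parameters, and exact wording are hypothetical, not the prompt we use in production. The point is that it states the audience, breaks the task into sub-steps, names the output format and length of each part, and describes the desired tone as something to do rather than something to avoid.

```python
def build_question_prompt(subject: str, topic: str, grade: str, num_questions: int = 5) -> str:
    """Build a direct, specific prompt for generating multiple-choice questions."""
    return (
        # Task, scope, and audience stated explicitly instead of left implicit.
        f"Write {num_questions} multiple-choice questions about \"{topic}\" "
        f"in {subject} for {grade} students.\n"
        # The task broken into small sub-steps, each with an explicit format and length.
        "For each question:\n"
        "1. Write the question stem in one sentence.\n"
        "2. Give exactly four options labeled A, B, C and D.\n"
        "3. On a new line, write 'Answer:' followed by the letter of the single correct option.\n"
        # Desired tone phrased as what to do, not what to avoid.
        "Use a neutral, encouraging tone and vocabulary suited to the grade level."
    )


prompt = build_question_prompt("Science", "Photosynthesis", "7th grade", num_questions=3)
print(prompt)
```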

Few-shot Prompting

Teachers want their students to master what they are learning, and one common way they support this is by providing an answer explanation after a student attempts a problem. We started generating answer explanations for a given question using the techniques mentioned above. While that worked reasonably well, the explanations were inconsistent and sometimes contained mistakes.

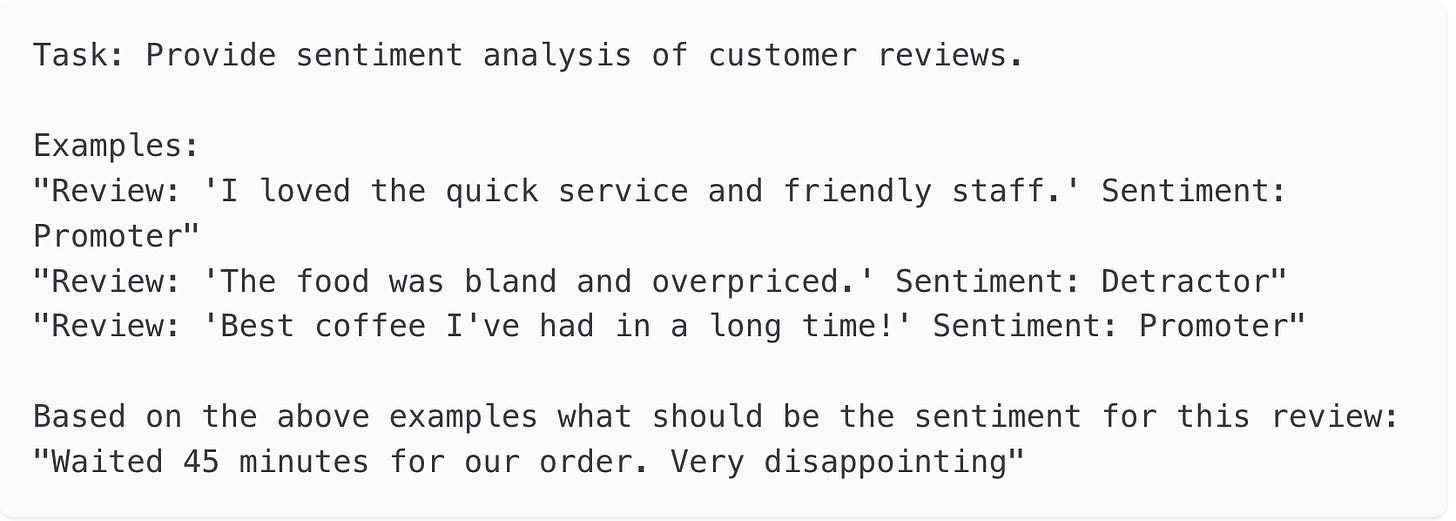

That’s where Few-shot Prompting came in. It involves providing the model with input-output pairs that it can use to infer the structure and tone of the desired response.

For our use case, our in-house experts categorized different types of concepts and manually wrote examples for each. Whenever an answer explanation needs to be generated, we retrieve the relevant examples and pass them to the model along with the new question.
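A minimal sketch of that retrieve-then-prompt flow, assuming the examples live in memory keyed by a concept label. The categories, examples, system message, and function name are all hypothetical. The examples are laid out as earlier user/assistant turns in the role-tagged message format that chat APIs accept, so the model can infer the structure and tone from them. Setting `k=1` turns this into one-shot prompting.

```python
from typing import Dict, List

# Hypothetical expert-written examples, keyed by concept category.
EXAMPLES: Dict[str, List[dict]] = {
    "fractions": [
        {"question": "What is 1/2 + 1/4?",
         "explanation": "Rewrite 1/2 as 2/4. Then 2/4 + 1/4 = 3/4. Answer: 3/4."},
    ],
    "percentages": [
        {"question": "What is 20% of 50?",
         "explanation": "20% means 20/100 = 0.2. Then 0.2 x 50 = 10. Answer: 10."},
    ],
}

SYSTEM = "You write short, step-by-step answer explanations for school students."


def build_few_shot_messages(concept: str, question: str, k: int = 2) -> List[dict]:
    """Retrieve up to k examples for the concept and lay them out as prior chat turns."""
    messages = [{"role": "system", "content": SYSTEM}]
    for ex in EXAMPLES.get(concept, [])[:k]:
        messages.append({"role": "user", "content": ex["question"]})
        messages.append({"role": "assistant", "content": ex["explanation"]})
    # The new question goes last; the model is expected to follow the examples' pattern.
    messages.append({"role": "user", "content": question})
    return messages


msgs = build_few_shot_messages("fractions", "What is 1/3 + 1/3?")
```

If no examples exist for a concept, the function falls back to a plain zero-shot prompt (system message plus the question).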

One-shot prompting is the special case in which exactly one input-output pair is provided as an example.

Chain of Thought Prompting

At Quizizz, we deal with many math-related use cases, and one of the biggest challenges is that they require reasoning. Asking an LLM to answer a math question directly often produces incorrect answers. Chain of Thought Prompting is a technique that induces explicit reasoning in the model’s output, helping it break a complex task into smaller steps that it handles well.

Asking a model to “Think step-by-step” or to “Explain the reason before answering” are simple ways to apply Chain of Thought Prompting. We can also use Few-shot Chain of Thought Prompting, where the examples show the model what the thought process should look like.
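A sketch combining both ideas: a "Think step-by-step" instruction plus one worked example (few-shot Chain of Thought). The final answer is requested on its own line so the application can parse it out of the reasoning. The `Answer:` convention, the function names, and the example problem are assumptions for illustration, and the actual model call is left out.

```python
import re
from typing import Optional

# One worked example showing the reasoning format the model should imitate.
COT_EXAMPLE = (
    "Question: A pencil costs 3 coins and a notebook costs 5 coins. "
    "How much do 2 pencils and 1 notebook cost?\n"
    "Reasoning: 2 pencils cost 2 x 3 = 6 coins. Adding the notebook gives 6 + 5 = 11 coins.\n"
    "Answer: 11"
)


def build_cot_prompt(question: str) -> str:
    """Ask for step-by-step reasoning before a final, machine-readable answer line."""
    return (
        "Think step-by-step. Write your reasoning first, then the final answer "
        "on its own line starting with 'Answer:'.\n\n"
        f"{COT_EXAMPLE}\n\n"
        f"Question: {question}\nReasoning:"
    )


def extract_answer(completion: str) -> Optional[str]:
    """Return the last 'Answer:' line from the model's output, or None if it is missing."""
    matches = re.findall(r"^Answer:\s*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


print(build_cot_prompt("A box holds 4 rows of 3 apples. 5 are eaten. How many are left?"))
```

Separating the reasoning from the answer line lets the product show or hide the working while still checking the final answer programmatically.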

Conclusion

The first step in building an AI-based product is developing a basic understanding of the foundational technologies and learning how to use them effectively. We hope this article provides a good starting point: with careful prompt engineering, you can leverage these models to create compelling AI features. Ultimately, it all comes down to experimentation and finding the model and prompt that work for your use case.

In the next part of this series, we will go beyond the basics of prompting to explore advanced techniques that make AI products more capable and reliable.

If you’re interested in leveraging AI to build products that improve the daily lives of educators and learners, we want you! We’re looking for backend and frontend engineers who have a high sense of ownership, are curious, and are willing to step into the world of a fast-paced startup. Apply to the open roles using the link below.